To characterize the database, first do some sampling and hand-matching to estimnate the degree of matching within the database. That is to say, if there is a quick and dirty way to say "these two videos can't be matches', then we should use it first, before we even start to confirm a match. However, the more likely case is that matches will be rare, so we will want to minimize the cost of an unsuccessful match. If 100% of the videos match some other video, then we will want to minimize the cost of a successful match. The optimal algorithms for this process will greatly depend on the characteristics of the database, the level to which single and multiple matches exist. So our primary goal should be minimizing the costs of the last step, even if it means adding many early, simple steps. The cost of the last step must be worse than O(1), potentially much worse. The cost of the first two steps is O(1) on the number of videos. Finally, the comparisons needed to match all videos to each other must be performed. Once the extracted data is available, then the cost of performing a comparison must be considered. One key cost to consider is that of extracting the data needed from each video for whatever comparison metrics are to be used. So we really care about the local cost of each comparison in several different domains: 1) Data storage, 2) compute resources, and 3) time.

And the size of the database likely makes the use of cloud computing resources unfeasible. It is important that the comparisons be performed using the compute and time resources available: I doubt a solution that takes months to run will be very useful in a dynamic video database. This is a huge problem, so I've chosen to write a rather lengthy reply to try to decompose the problem into parts that may be easier to solve. Or am I going the completely wrong way? I think I can't be the first one having this problem but I have not had any luck finding solutions.

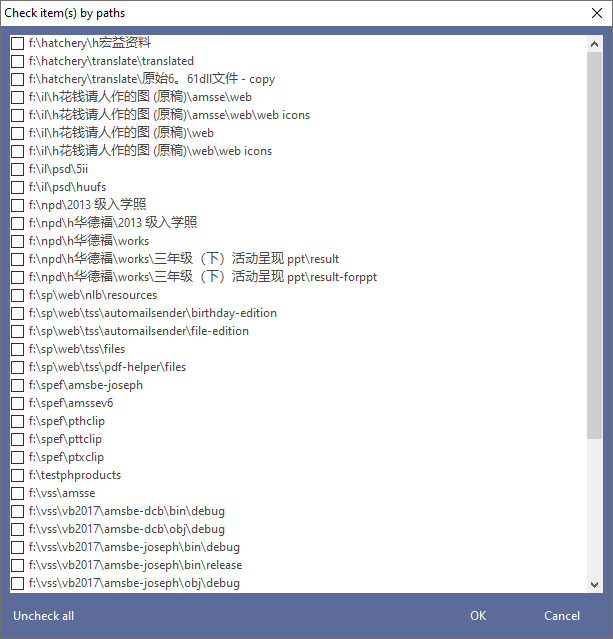

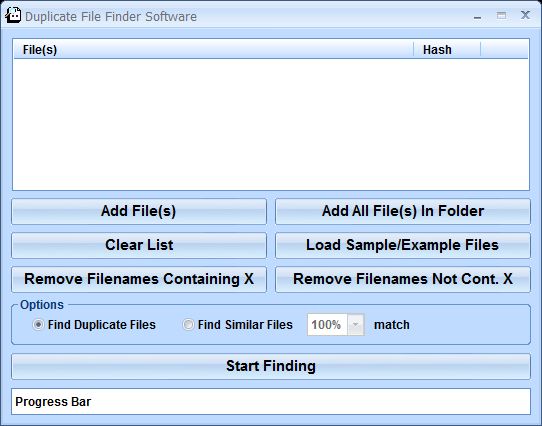

Or are cut off at the beginning and/or end. They can have different quality, are amby cropped, watermarked or have a sequel/prequel. Problem is the videos aren't exact duplicates. Now I need some sort of procedure where I index metadata in a database, and whenever a new video enters the catalog the same data is calculated and matched against in the database. With every video file I have associated semantic and descriptive information which I want to merge duplicates to achive better results for every one. I got a project having a catalog of currently some ten thousand video files, the number is going to increase dramatically.
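The cost argument above (a quick and dirty rejection test first, an expensive confirmation only for the pairs that survive it) can be sketched for the incremental setting in the question: match each new video against the catalog, then store it. This is a minimal sketch, and everything concrete in it is an assumption rather than part of the answer: each video is represented by a hypothetical list of 64-bit per-frame perceptual hashes (how they are computed is not shown); the cheap test is "shares at least one coarse key (the top 12 bits of some frame hash)", kept in an inverted index so that non-candidates are never compared at all; and the confirmation requires 80% of the shorter video's frames to be within 10 bits of some frame in the longer one, which tolerates copies cut off at the beginning and/or end. The names `Video`, `VideoIndex`, and `frames_overlap` are illustrative only.

```python
import random
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Set


@dataclass
class Video:
    """Hypothetical record: one 64-bit perceptual hash per sampled frame."""
    vid: str
    frame_hashes: List[int]


def coarse_keys(video: Video, bits: int = 12) -> FrozenSet[int]:
    # Cheap summary (assumed choice): the top `bits` bits of each frame hash.
    return frozenset(h >> (64 - bits) for h in video.frame_hashes)


def hamming(a: int, b: int) -> int:
    # Number of differing bits between two hashes.
    return bin(a ^ b).count("1")


def frames_overlap(a: Video, b: Video, max_bits: int = 10,
                   min_fraction: float = 0.8) -> bool:
    """Expensive confirmation: most frames of the shorter video must have a
    near-identical frame somewhere in the longer one, so a trimmed copy
    can still match. Thresholds are assumptions to be tuned."""
    short, longer = sorted((a, b), key=lambda v: len(v.frame_hashes))
    if not short.frame_hashes:
        return False
    hits = sum(
        1
        for h in short.frame_hashes
        if min(hamming(h, g) for g in longer.frame_hashes) <= max_bits
    )
    return hits / len(short.frame_hashes) >= min_fraction


class VideoIndex:
    """In-memory stand-in for the metadata database (hypothetical)."""

    def __init__(self) -> None:
        self.videos: Dict[str, Video] = {}
        self.by_key: Dict[int, Set[str]] = {}
        self.confirmations = 0  # number of expensive comparisons performed

    def add(self, video: Video) -> List[str]:
        """Return IDs of catalog videos matching `video`, then store it."""
        keys = coarse_keys(video)
        # Quick and dirty rejection: only videos sharing a coarse key become
        # candidates; every other pair is never compared.
        candidates: Set[str] = set()
        for k in keys:
            candidates |= self.by_key.get(k, set())
        matches = []
        for vid in sorted(candidates):
            self.confirmations += 1
            if frames_overlap(video, self.videos[vid]):
                matches.append(vid)
        self.videos[video.vid] = video
        for k in keys:
            self.by_key.setdefault(k, set()).add(video.vid)
        return matches


if __name__ == "__main__":
    rng = random.Random(42)
    original = Video("a", [rng.getrandbits(64) for _ in range(30)])
    # Trimmed copy with small per-frame differences (two low bits flipped).
    trimmed = Video("b", [h ^ 0b11 for h in original.frame_hashes[5:25]])
    unrelated = Video("c", [rng.getrandbits(64) for _ in range(30)])
    index = VideoIndex()
    print(index.add(original))   # []
    print(index.add(trimmed))    # ['a']
    print(index.add(unrelated))  # []
```

The `confirmations` counter is the quantity worth minimizing. The trade-off is that a coarse-key test this strict will falsely reject copies whose re-encoding or watermark changes the top bits of every frame hash, and frame hashes like these do little for cropping, so the thresholds would have to be checked against a hand-matched sample.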

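For the characterization step (sampling and hand-matching to estimate the degree of matching), a minimal sketch: draw a reproducible random sample of video IDs, have a person check each one for a duplicate elsewhere in the catalog, and report the observed match rate with a 95% Wilson score interval. Sampling videos rather than random pairs is my assumption: when matches are rare, almost every random pair is a non-match, so pair sampling would need a very large sample to see any positives. The function names and the hand-matching count below are hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple


def sample_for_hand_matching(video_ids: Sequence[str], k: int,
                             seed: int = 0) -> List[str]:
    """Pick k distinct video IDs uniformly at random (reproducible via seed)."""
    return random.Random(seed).sample(list(video_ids), k)


def wilson_interval(matches: int, n: int,
                    z: float = 1.96) -> Tuple[float, float]:
    """Wilson score interval for a proportion; z=1.96 gives ~95% coverage."""
    if n <= 0:
        raise ValueError("need at least one hand-checked video")
    p = matches / n
    denom = 1 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return max(0.0, center - half), min(1.0, center + half)


if __name__ == "__main__":
    catalog = [f"video-{i:05d}" for i in range(10_000)]
    to_check = sample_for_hand_matching(catalog, 50, seed=1)
    found = 3  # hypothetical: a person found a duplicate for 3 of the 50
    low, high = wilson_interval(found, len(to_check))
    print(f"observed {found / len(to_check):.1%}, 95% CI {low:.1%} to {high:.1%}")
```

With 0 duplicates among 50 hand-checked videos, the interval is roughly 0% to 7%, which is the kind of estimate that tells you whether to optimize for the unsuccessful-match case.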